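For reasons everyone knows (F**K Red H*t), CentOS 8 is nearing the end of its life cycle, so our systems are moving over to Ubuntu. Fortunately, all of our applications already run on Kubernetes, which makes the migration much easier. I've been testing Ubuntu recently, so here are my notes on deploying the latest Kubernetes on Ubuntu 20.04 LTS.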

Base environment:

OS: Ubuntu 20.04 LTS

master:172.19.82.170

slave1:172.19.82.192

slave2:172.19.82.160

Base packages:

$ sudo su
# apt update && apt upgrade -y && init 6

# apt install -y net-tools lrzsz htop

Set the time zone, and restart rsyslog so that log timestamps use it immediately:

# timedatectl set-timezone Asia/Shanghai && systemctl restart rsyslog

Set the hostnames and hosts resolution (run each hostnamectl command on its own node):

# hostnamectl set-hostname master && bash

# hostnamectl set-hostname slave1 && bash

# hostnamectl set-hostname slave2 && bash

# cat >> /etc/hosts << EOF
172.19.82.170 master
172.19.82.192 slave1
172.19.82.160 slave2
EOF
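Running `cat >> /etc/hosts` a second time appends duplicate entries. A minimal idempotent sketch, assuming bash and the three node names and IPs used in this article; the function name `add_hosts_entries` and its file argument are hypothetical, added so it can be tried on a scratch file before touching /etc/hosts:

```shell
# Hypothetical helper: append each "IP NAME" pair to a hosts file only if
# that exact line is not already there, so re-running it is harmless.
# Usage: add_hosts_entries [FILE]   (FILE defaults to /etc/hosts)
add_hosts_entries() {
  local file="${1:-/etc/hosts}"
  local entry
  # Cluster nodes from this article.
  for entry in "172.19.82.170 master" "172.19.82.192 slave1" "172.19.82.160 slave2"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! grep -qxF "$entry" "$file"; then
      printf '%s\n' "$entry" >> "$file"
    fi
  done
}
```

Run it as root on every node, then `getent hosts slave1` should print the slave1 address.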

Disable swap (simple enough that I won't cover it here)

Disable SELinux and the firewall (skipped for the same reason)
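Since these steps are skipped above, here is a minimal sketch for the swap part, assuming swap is configured through /etc/fstab. `swapoff -a` turns swap off right away; commenting out the fstab entry keeps it off after a reboot. The function name `disable_swap_in_fstab` is hypothetical. For the rest: on Ubuntu the host firewall is normally ufw (`ufw disable`), and SELinux is not enabled by default (Ubuntu uses AppArmor), so there is usually nothing to turn off there.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab
# file so swap stays disabled after reboot. Requires GNU sed (-i).
# Run `swapoff -a` separately to disable swap immediately.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; on the others, prefix "#" when
  # a field is exactly "swap" (the filesystem-type column).
  sed -i -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}
```

Usage as root: `swapoff -a && disable_swap_in_fstab`, then `free -h` should report 0B of swap.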

Tune the kernel parameters:

# cat >> /etc/sysctl.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.max_map_count = 262144
EOF

# modprobe br_netfilter && sysctl -p /etc/sysctl.conf
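`modprobe br_netfilter` only loads the module until the next reboot, and the `net.bridge.*` keys do not exist without it. A minimal sketch that persists both through drop-in files, assuming systemd's standard `/etc/modules-load.d/` and `/etc/sysctl.d/` directories; the file name `k8s.conf` and the function `write_k8s_sysctl_dropins` are hypothetical, and the optional root argument only exists so it can be tried against a scratch directory:

```shell
# Hypothetical helper: write the module list and the sysctl keys used in
# this article as drop-in files under ROOT (default: the real root "/").
write_k8s_sysctl_dropins() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Read by systemd-modules-load at every boot.
  cat > "$root/etc/modules-load.d/k8s.conf" <<'EOF'
br_netfilter
EOF
  # Applied at boot and by `sysctl --system`.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.max_map_count = 262144
EOF
}
```

Usage as root: `write_k8s_sysctl_dropins && modprobe br_netfilter && sysctl --system`. Keeping the same keys in /etc/sysctl.conf as well is harmless.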

Set rp_filter

If the Kubernetes cluster uses the Calico network plugin, the two kernel parameters below must be 0 or 1, but Ubuntu 20.04 sets them to 2 by default:

# vim /etc/sysctl.d/10-network-security.conf
net.ipv4.conf.default.rp_filter=1
net.ipv4.conf.all.rp_filter=1

# sysctl --system
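To confirm the change took effect, the live values can be read back from /proc. A minimal sketch; `check_rp_filter` is hypothetical, and its optional proc-root argument only exists so it can be exercised against a fake directory. Per the kernel's ip-sysctl documentation, the effective value on an interface is the maximum of `conf.all` and that interface's own setting, so interfaces that existed before the change may still need checking individually.

```shell
# Hypothetical helper: succeed only if rp_filter is 0 or 1 for both "all"
# and "default"; print each offending key otherwise.
check_rp_filter() {
  local proc="${1:-/proc}" key value status=0
  for key in all default; do
    value=$(cat "$proc/sys/net/ipv4/conf/$key/rp_filter")
    case "$value" in
      0|1) ;;
      *) echo "net.ipv4.conf.$key.rp_filter=$value (expected 0 or 1)"; status=1 ;;
    esac
  done
  return "$status"
}
```

Usage: `check_rp_filter && echo ok`.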

Install docker-ce

# apt remove docker docker-engine docker.io containerd runc

# apt-get install ca-certificates curl gnupg lsb-release

# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# apt update

# apt install docker-ce docker-ce-cli containerd.io

# systemctl enable docker

# mkdir -p /etc/docker

# vim /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}

# systemctl restart docker
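kubeadm 1.22 defaults the kubelet's cgroup driver to systemd when none is configured, which is why Docker is switched to systemd here: the two drivers must match. A minimal non-interactive sketch of the same daemon.json edit, assuming the file holds no other settings yet (it overwrites the file); `write_docker_daemon_json` and its directory argument are hypothetical:

```shell
# Hypothetical helper: write a daemon.json that sets Docker's cgroup driver
# to systemd. Overwrites any existing daemon.json in DIR.
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After `systemctl restart docker`, running `docker info --format '{{.CgroupDriver}}'` should print `systemd`.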

Install Kubernetes 1.22

# apt install ca-certificates curl software-properties-common apt-transport-https

# curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

# cat >> /etc/apt/sources.list.d/kubernetes.list << EOF
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

# apt update && apt install -y kubelet kubeadm kubectl

# apt-mark hold kubelet kubeadm kubectl    # optional: pin the versions if you need to lock them

# apt-mark unhold kubelet kubeadm kubectl  # release the pin

# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.2", GitCommit:"8b5a19147530eaac9476b0ab82980b4088bbc1b2", GitTreeState:"clean", BuildDate:"2021-09-15T21:37:34Z", GoVersion:"go1.16.8", Compiler:"gc", Platform:"linux/amd64"}

# systemctl enable kubelet

Edit the configuration file (master node only):

# kubeadm config print init-defaults > kubeadm.yaml

# vim kubeadm.yaml    # change or add the 4 lines marked below

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.19.82.170  # change to the master node IP
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: node
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers  # change to the Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: 1.22.2  # change to the actual version; the default was 1.22.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16  # added
  serviceSubnet: 10.96.0.0/12
scheduler: {}

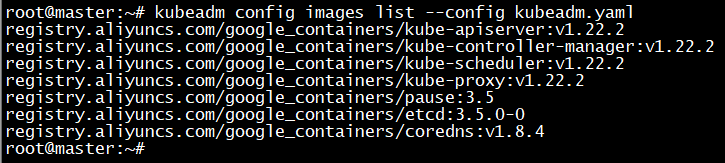
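The four manual edits can also be scripted with GNU sed, which helps when the file is regenerated. A minimal sketch, assuming the unmodified `kubeadm config print init-defaults` output (two-space indentation, no existing `podSubnet` line); `patch_kubeadm_config` is hypothetical, and its version argument should match what `kubeadm version -o short` reports:

```shell
# Hypothetical helper: apply this article's 4 edits to a file produced by
# `kubeadm config print init-defaults`. Requires GNU sed (-i, "\n").
# Usage: patch_kubeadm_config FILE MASTER_IP K8S_VERSION
patch_kubeadm_config() {
  local file="$1" master_ip="$2" version="$3"
  sed -i -E \
    -e "s/^([[:space:]]*advertiseAddress:).*/\1 ${master_ip}/" \
    -e 's#^(imageRepository:).*#\1 registry.aliyuncs.com/google_containers#' \
    -e "s/^(kubernetesVersion:).*/\1 ${version}/" \
    "$file"
  # Add podSubnet (flannel's default network is 10.244.0.0/16) right after
  # dnsDomain with the same indentation; skip if it is already present.
  if ! grep -q 'podSubnet:' "$file"; then
    sed -i -E 's#^([[:space:]]*)dnsDomain: cluster\.local$#&\n\1podSubnet: 10.244.0.0/16#' "$file"
  fi
}
```

Usage on the master: `kubeadm config print init-defaults > kubeadm.yaml && patch_kubeadm_config kubeadm.yaml 172.19.82.170 1.22.2`.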

List the required images (master node only):

# kubeadm config images list --config kubeadm.yaml
registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.2
registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.2
registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.2
registry.aliyuncs.com/google_containers/kube-proxy:v1.22.2
registry.aliyuncs.com/google_containers/pause:3.5
registry.aliyuncs.com/google_containers/etcd:3.5.0-0
registry.aliyuncs.com/google_containers/coredns:v1.8.4

Pull the images:

# kubeadm config images pull --config kubeadm.yaml
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.2
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.2
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.2
[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.22.2
[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.5
[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.0-0
[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.4

Initialize the cluster (master node only):

# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.2
[preflight] Running pre-flight checks
[WARNING Hostname]: hostname "node" could not be reached
[WARNING Hostname]: hostname "node": lookup node on 127.0.0.53:53: server
························
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
·····················

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node NotReady control-plane,master 34m v1.22.2

Join the slave nodes to the cluster (run on both slave nodes):

# kubeadm join 172.19.82.170:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:7600dfe96c4aed7cf86a4d43c8ab1653b642f0xxxxxxxxxxxxxxxxxxxxx
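The bootstrap token in kubeadm.yaml has `ttl: 24h0m0s`, so a node that joins later needs a fresh one; `kubeadm token create --print-join-command` on the master prints a complete new join command. The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA with the openssl pipeline from the kubeadm documentation, wrapped here in a hypothetical `ca_cert_hash` function. It assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# Hypothetical helper: print the SHA-256 of the CA certificate's public key
# (DER-encoded), i.e. the hex string kubeadm join expects after "sha256:".
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage on the master: `echo "sha256:$(ca_cert_hash)"`.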

# kubectl get nodes    # check on the master node
NAME     STATUS     ROLES                  AGE     VERSION
node     NotReady   control-plane,master   39m     v1.22.2
slave1   NotReady   <none>                 2m50s   v1.22.2
slave2   NotReady   <none>                 113s    v1.22.2

Install the flannel plugin

# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

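# vim kube-flannel.yml

Add an argument in the section below to pin flannel to a specific NIC. This matters most on hosts with several NICs, because by default flannel uses the interface of the default route.

args:
- --ip-masq
- --kube-subnet-mgr
resources:

becomes

args:
- --ip-masq
- --kube-subnet-mgr
- --iface=ens3 # the specific NIC to use
resources: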
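The same edit can be made without an editor. A minimal GNU sed sketch, assuming the manifest contains a single `- --kube-subnet-mgr` line (worth confirming with `grep -n kube-subnet-mgr kube-flannel.yml` first) and that `ens3` is the interface carrying the 172.19.82.x addresses (`ip -br -4 addr` lists them); `add_flannel_iface` is hypothetical:

```shell
# Hypothetical helper: insert "- --iface=IFACE" right after the
# "- --kube-subnet-mgr" argument, reusing its indentation.
# Does nothing if an --iface argument is already present.
add_flannel_iface() {
  local manifest="$1" iface="$2"
  grep -q -- '--iface=' "$manifest" && return 0
  sed -i -E "s#^([[:space:]]*)- --kube-subnet-mgr\$#&\n\1- --iface=${iface}#" "$manifest"
}
```

Usage: `add_flannel_iface kube-flannel.yml ens3`, then `kubectl create -f kube-flannel.yml` as below.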

# kubectl create -f kube-flannel.yml

# kubectl get nodes
NAME     STATUS   ROLES                  AGE   VERSION
node     Ready    control-plane,master   63m   v1.22.2
slave1   Ready    <none>                 26m   v1.22.2
slave2   Ready    <none>                 25m   v1.22.2
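Nodes stay NotReady until the flannel pods are running on them. Instead of re-running `kubectl get nodes` by hand, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until every node is Ready. Where a plain shell check is preferred, here is a sketch of a hypothetical `all_nodes_ready` filter over `kubectl get nodes --no-headers` output:

```shell
# Hypothetical filter: read `kubectl get nodes --no-headers` output on stdin
# and succeed only if there is at least one node and every STATUS column is
# exactly "Ready" (a cordoned "Ready,SchedulingDisabled" node counts as not ready).
all_nodes_ready() {
  awk 'NF { n++; if ($2 != "Ready") bad = 1 } END { exit (n == 0 || bad) }'
}
```

Usage: `until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done`.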

The cluster is now up and running.

I forgot one last step, enabling IPVS, so here it is.

Enable IPVS

# kubectl -n kube-system get cm
NAME                                 DATA   AGE
coredns                              1      15d
extension-apiserver-authentication   6      15d
kube-flannel-cfg                     2      15d
kube-proxy                           2      15d
kube-root-ca.crt                     1      15d
kubeadm-config                       1      15d
kubelet-config-1.22                  1      15d

# kubectl -n kube-system edit cm kube-proxy    # edit the ConfigMap directly

kind: KubeProxyConfiguration
metricsBindAddress: ""
mode: ""
nodePortAddresses: null

change to

kind: KubeProxyConfiguration
metricsBindAddress: ""
mode: "ipvs"
nodePortAddresses: null

# kubectl get pods -n kube-system

# kubectl -n kube-system delete pod kube-proxy-b58gv kube-proxy-dlmns kube-proxy-z4bpq    # delete the three kube-proxy pods so they are recreated with the new mode
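A non-interactive variant of the ConfigMap edit and pod restart, as a sketch: a hypothetical `set_proxy_mode_ipvs` filter rewrites the empty `mode: ""` line shown above, and `kubectl rollout restart` recreates every kube-proxy pod without copying pod names by hand. The module list (`ip_vs`, `ip_vs_rr`, `ip_vs_wrr`, `ip_vs_sh`, `nf_conntrack`) is the set commonly loaded for kube-proxy's IPVS mode; persisting it through `/etc/modules-load.d/ipvs.conf` with the hypothetical `write_ipvs_modules` helper is an assumption about how you want to manage it.

```shell
# Hypothetical filter: change kube-proxy's mode from "" (iptables on Linux)
# to "ipvs" in ConfigMap YAML read from stdin, keeping the indentation.
# Only a line consisting of "mode:" (not e.g. detectLocalMode) is touched.
set_proxy_mode_ipvs() {
  sed -E 's/^([[:space:]]*mode:) ""$/\1 "ipvs"/'
}

# Hypothetical helper: list the IPVS-related modules so systemd loads them
# at boot. ROOT defaults to "/"; run on every node as root.
write_ipvs_modules() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d"
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack \
    > "$root/etc/modules-load.d/ipvs.conf"
}
```

Usage: on every node run `write_ipvs_modules && modprobe -a ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`; then on the master run `kubectl -n kube-system get cm kube-proxy -o yaml | set_proxy_mode_ipvs | kubectl replace -f -` followed by `kubectl -n kube-system rollout restart daemonset kube-proxy`.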

# apt install ipvsadm    # install ipvsadm

# ipvsadm -Ln    # list the IPVS rules

IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.17.0.1:80 rr
  -> 10.244.1.21:80               Masq    1      0          0

Remove the taint from the master node:

# kubectl get nodes
NAME     STATUS   ROLES                  AGE   VERSION
node     Ready    control-plane,master   16d   v1.22.3
slave1   Ready    worker                 16d   v1.22.3
slave2   Ready    worker                 16d   v1.22.3

# kubectl describe nodes node | grep Taints
Taints:             node-role.kubernetes.io/master:NoSchedule

# kubectl taint node node node-role.kubernetes.io/master-
node/node untainted

# kubectl describe nodes node | grep Taints
Taints:             <none>

Shell completion:

# apt install bash-completion
# source /usr/share/bash-completion/bash_completion
# source <(kubectl completion bash)
# echo 'source <(kubectl completion bash)' >> ~/.bashrc